GPTQ vs GGML vs Base Models: A Quick Speed and VRAM Test for Vicuna-33B on 2x A100 80GB SXM

Table of Contents

Summary #

If you’re looking for a specific open-source LLM, you’ll see that there are lots of variations of it. GPTQ versions, GGML versions, HF/base versions. Which version should you use? As a general rule: Use GPTQ if you have a lot of VRAM, use GGML if you have minimal VRAM, and use the base HuggingFace model if you want the original model without any possible negligible intelligence loss from quantization.

Note that GGML is working on improved GPU support.

Generally quantized models are both faster and require less VRAM, but they can be very slightly less intelligent.1 4-bit, 5-bit, or 6-bit seem like sweetspots for many use cases.

SuperHOT allows for 8K context size rather than 2K.

Comparison of Model Speed and VRAM usage #

| Model | Tokens/Sec | VRAM Used at Idle (GB) | VRAM Used During Inference (GB) | CPU Utilization During Inference (%) |

|---|---|---|---|---|

| 🏆 TheBloke/Vicuna-33B-1-3-SuperHOT-8K-GPTQ | 15.76 | 22 | 24 | 0 |

| TheBloke/Vicuna-33B-1-3-SuperHOT-8K-fp16 | 13.24 | 62 | 64 | 3 |

| TheBloke/vicuna-33B-GPTQ | 11.77 | 26 | 22 | 3 |

| lmsys/vicuna-33b-v1.3 | 7.08 | 62 | 62 | 3 |

| TheBloke/vicuna-33B-GGML | 0.33 | 6 | 22 | 99 |

| TheBloke/Vicuna-33B-1-3-SuperHOT-8K-GGML | N/A | 22 | 22 | 100 |

| Yhyu13/vicuna-33b-v1.3-gptq-4bit | N/A | 13 | N/A | N/A |

Notes on these results #

Your GGML figures are surprisingly low - possibly the reason you got such poor performance is because it was trying to split it across two GPUs, which is generally slower. I would limit that to one GPU only. In general there must be some issue with those GGML performance figures, it should do much better than that

It’s worth mentioning that although it’s a 2x A100 system, you were only using one GPU for most of the tests (which is good - single-prompt multi-GPU inference is slower than single GPU, often much slower).

You should test ExLlama as well, which will be significantly faster than AutoGPTQ. Most people are using ExLlama for GPTQ Llama models now, and I would expect 50+ tokens/s if not more on that hardware

– TheBloke

System Specifications and Settings #

- Processor: 32 vCPU

- RAM: 503 GB

- Graphics Card: 2 x A100 SXM 80GB

Client: text-generation-webui by oobabooga. (P.S. It’s oobabooga not oogabooga, I got it wrong for a while!)

Settings: default (simple-1) with a temperature of 0.7, top_p of 0.9, top_k of 20, and max_tokens of 200.

Streaming on, which may have significantly slowed performance.

Prompt #

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user’s questions.

USER: Please write a python program that takes a text file with one item per line, and then shows the user 2 items at a time, and asks the users to vote on the winner, basically creating an elo score of sorts for each fo the ideas. The elo scores are saved in the file, so the user can either resume from existing elo scores, or if there are no elo scores in the text file, it’ll create them and add them to the right line in the file as it goes.

ASSISTANT:

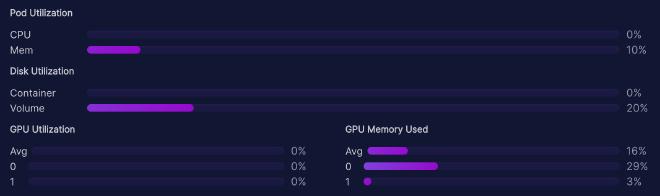

TheBloke/Vicuna-33B-1-3-SuperHOT-8K-GPTQ #

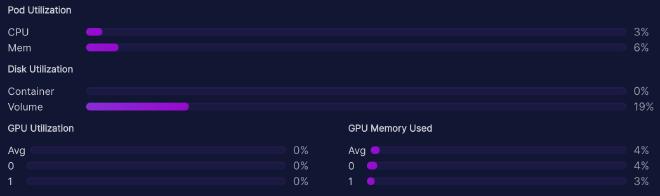

- Loaded with default loader, AutoGPTQ

- 18.78 seconds for processing (296 tokens, 709 chars)

Response

Here’s a Python program that does what you described:

import os

import sys

from collections import defaultdict

def main():

# Check if the ELO scoring file exists

elo_file = "elo_scores.txt"

if not os.path.exists(elo_file):

print("The ELO scoring file doesn't exist. Creating a new one...")

else:

# Continue where the previous session left off, using the ELO scores from the file

with open(elo_file, "r") as f:

elo = defaultdict(int)

for line in f:

if line.strip() == ".nis":

break

else:

opponent_, winner_, score_ = line.split(",", 2)

winner_ = int(winner_)

opponent_ = int

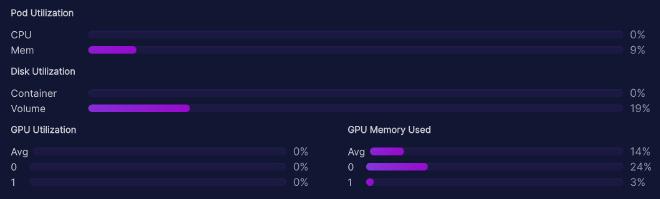

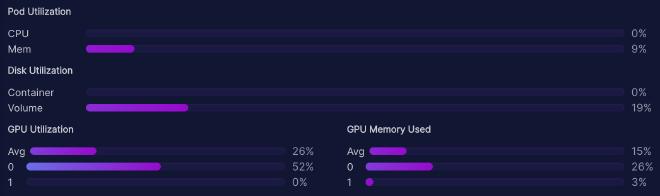

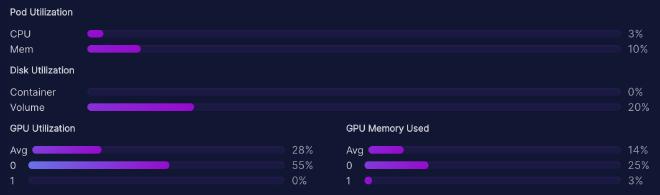

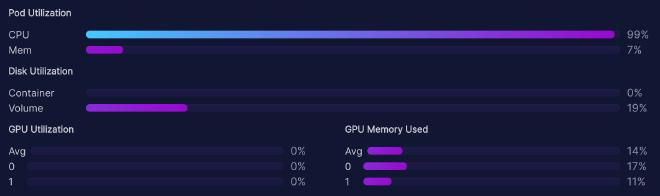

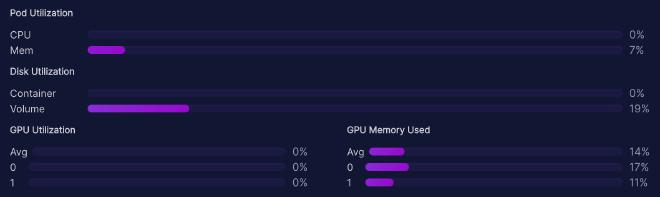

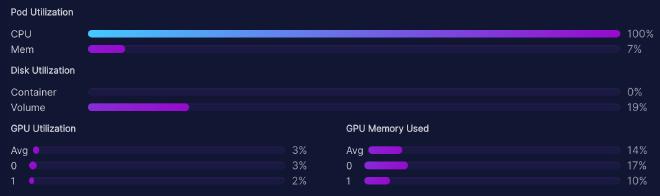

TheBloke/Vicuna-33B-1-3-SuperHOT-8K-fp16 #

- 670 characters produced (243 tokens on Tokenizer)

- 18.35 seconds for processing

- 78% VRAM on load

- 66% utilization during, 80% VRAM during

Response

Here’s a Python program that accomplishes this task using the elo_score library and a JSON file to store the ELO scores:

import sys

from elo import calculate_elo

import json

def main():

# Check if the user wants to resume from an existing ELO score file or start a new tournament

if len(sys.argv) == 2:

filename = sys.argv[1]

load_elo_scores(filename)

else:

filename = "elo_scores.json"

create_elo_scores(filename)

# Read the data from the file and display it two items at a time

items = read_items_and_votes(filename)

total_items = len(items)

for i in range(total_items - 1):

item

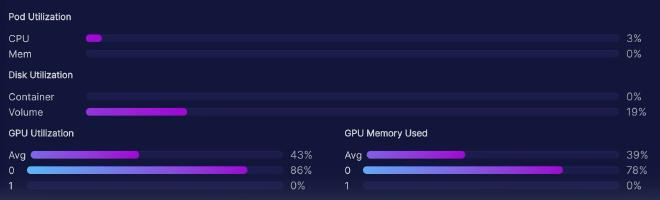

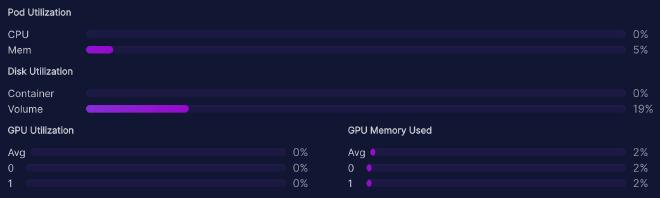

TheBloke/vicuna-33B-GPTQ #

- 18.35s for processing (216 tokens, 675 chars)

Response

Here is a Python program that accomplishes your task using the Elo rating system. This program assumes you have a text file named “ideas.txt” containing the list of items. It will prompt the user to input their choice and update the Elo scores accordingly. If the “elo_scores.txt” file doesn’t exist, it will be created.

import random

def calculate_elo(player_score, opponent_score):

return round((1 + math.sqrt(100 / (player_score + opponent_score))) * 100)

def main():

ideas = []

# Read ideas from "ideas.txt"

with open("ideas.txt", "r") as f:

lines = [line.strip() for line in f]

ideas = [line.strip() for line in lines]

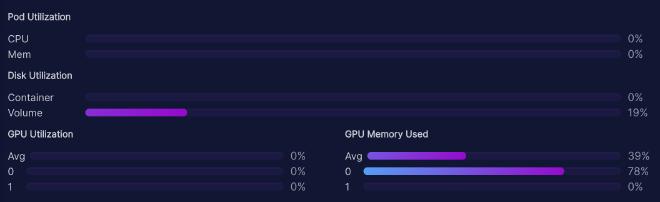

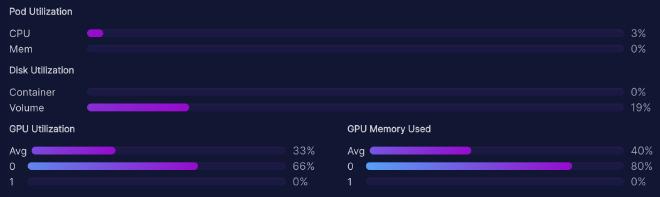

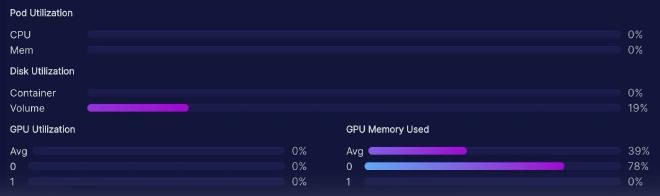

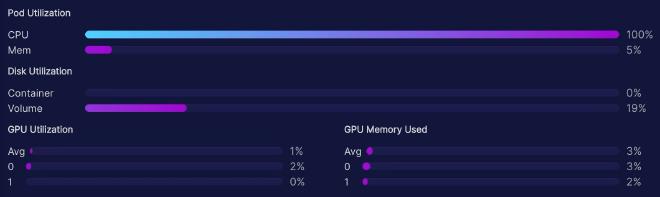

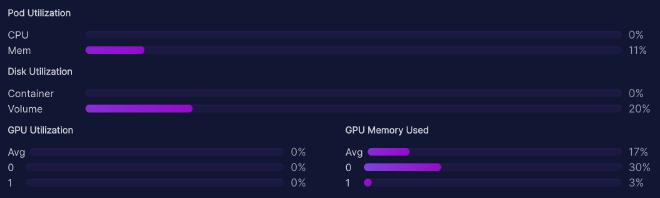

lmsys/vicuna-33b-v1.3 #

- 78% of GPU 1 after loading.

- 28.25s for 200 tokens, 573 characters (242 tokens on Tokenizer)

- 86% first GPU usage during inference

- 3% on CPU usage during inference

Response

Here is a Python program that accomplishes your task using the Elo rating system:

import random

import sys

def read_elo_scores(file_name):

try:

with open(file_name, 'r') as f:

return [int(score) for score in f.readlines()]

except FileNotFoundError:

return []

def write_elo_scores(file_name, elo_scores):

with open(file_name, 'w') as f:

for score in elo_scores:

f.write("%d\n" % score)

def calculate_expected_score(elo1, elo2):

return 1 / (1 + 10 ** ((elo2 - elo1) / 400))

def update_elo_scores(

TheBloke/vicuna-33B-GGML #

- With n-gpu-layers 128 2

- Stopped at 2 mins: 39 tokens in 2 mins, 177 chars

Response

Here’s a Python program that implements the described functionality using the elodic library for voting and Elo scoring. To use this code, you’ll need to install the elodic

TheBloke/Vicuna-33B-1-3-SuperHOT-8K-GGML #

- Test cancelled after first token, took >1 min for first token

Response

Here

Yhyu13/vicuna-33b-v1.3-gptq-4bit #

- Inference didn’t work, stopped after 0 tokens

Response

Test Failed

Conclusion #

If you’re looking for a specific open-source LLM, you’ll see that there are lots of variations of it. GPTQ versions, GGML versions, HF/base versions. Which version should you use? As a general rule: Use GPTQ if you have a lot of VRAM, use GGML if you have minimal VRAM, and use the base HuggingFace model if you want the original model without any possible negligible intelligence loss from quantization.

Note that GGML is working on improved GPU support.

Generally quantized models are both faster and require less VRAM, but they can be very slightly less intelligent.1 4-bit, 5-bit, or 6-bit seem like sweetspots for many use cases.

SuperHOT allows for 8K context size rather than 2K.

Acknowledgements #

Thanks to Eliot, TheBloke, Fiddlenator, Coffee Vampire and DoctorHacks.